Detecting and blocking OpenAI crawlers

OpenAI uses web crawlers to collect data from the internet in order to train its machine learning models. This post describes how to detect traffic from these crawlers and how to block it. To do this, I will use GreyNoise to research the characteristics of the traffic attributed to OpenAI's crawlers, and then demonstrate a simple proof of concept for blocking that traffic using Cloudflare Workers.

What does OpenAI use web crawlers for?

I first started looking into this when I heard that The New York Times had updated its robots.txt file to block requests with a "GPTBot" user agent.

Screenshot of The New York Times' robots.txt file, which includes the GPTBot user agent. [Source]

OpenAI has not disclosed many specifics about its data collection operations. However, it has published some information about its "GPTBot" crawler, which is apparently collecting the material used for large language model training.

According to OpenAI's documentation, this robots.txt entry disallows GPTBot:

User-agent: GPTBot
Disallow: /

And on a separate page, OpenAI discloses the IP ranges that GPTBot traffic will be coming from:

20.15.240.64/28
20.15.240.80/28
20.15.240.96/28
20.15.240.176/28
20.15.241.0/28
20.15.242.128/28
20.15.242.144/28
20.15.242.192/28
40.83.2.64/28

Each of these IP blocks belongs to AS8075, an autonomous system operated by Microsoft Corporation out of Iowa.

Using GreyNoise data to expand visibility of OpenAI crawlers

I have been using GreyNoise Intelligence for a couple of years now to enrich the web traffic I observe with their dataset of known scanners, crawlers, and other automated traffic. Assuming that OpenAI has deployed its crawlers against a broad range of websites, there is a non-zero probability that some OpenAI crawler traffic shows up in GreyNoise's dataset. From there, we can gain further insight into the characteristics of the crawler traffic.

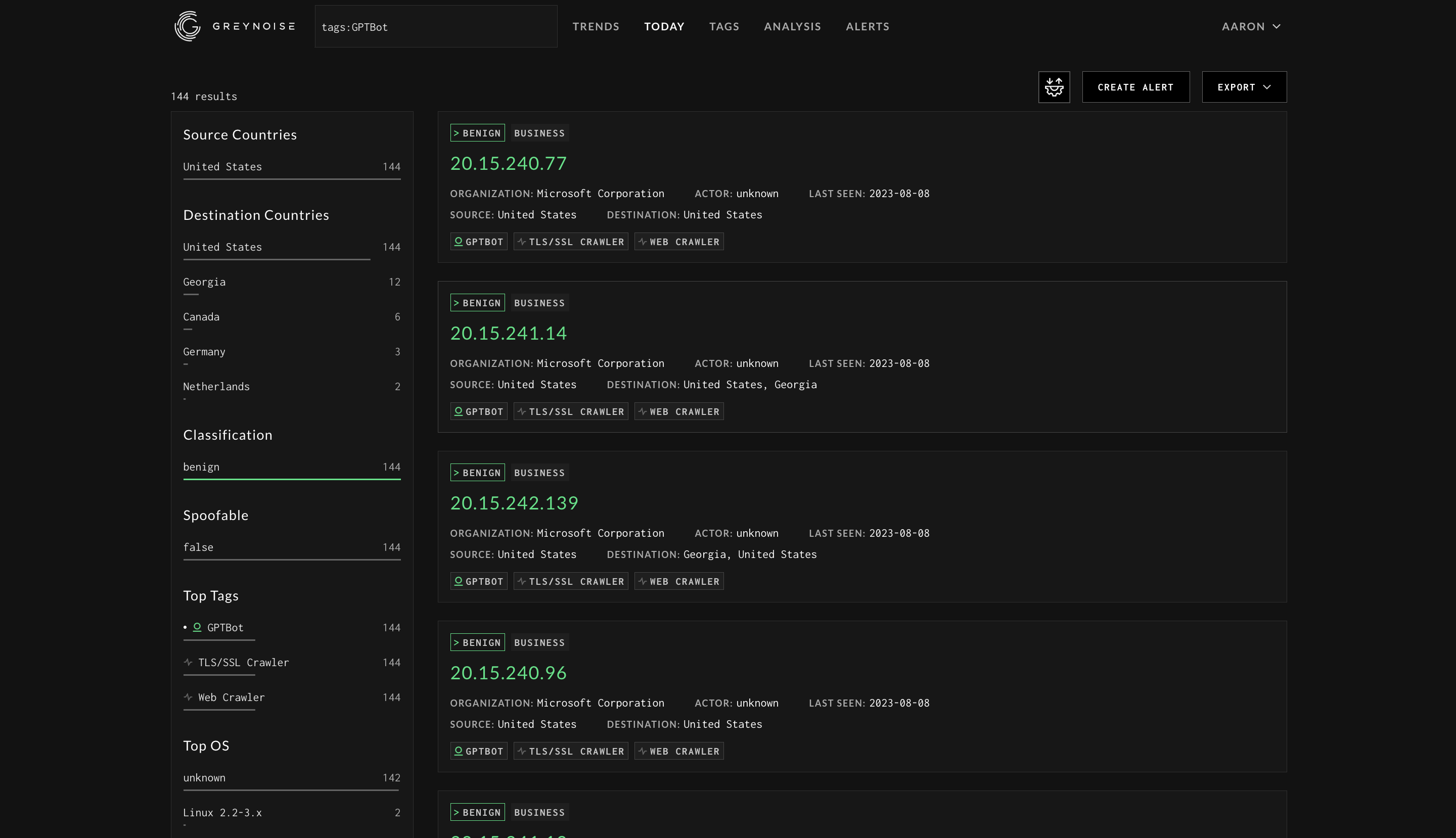

Screenshot of GreyNoise results when searching for traffic tagged as GPTBot. [Source]

Sure enough, the folks at GreyNoise have not only observed traffic from GPTBot but have also created a handy tag for it, which makes the traffic easy to pull up. So far, the IP addresses GreyNoise has observed appear to match the IP ranges published by OpenAI.

Exploratory data analysis on GreyNoise's observed OpenAI crawler traffic

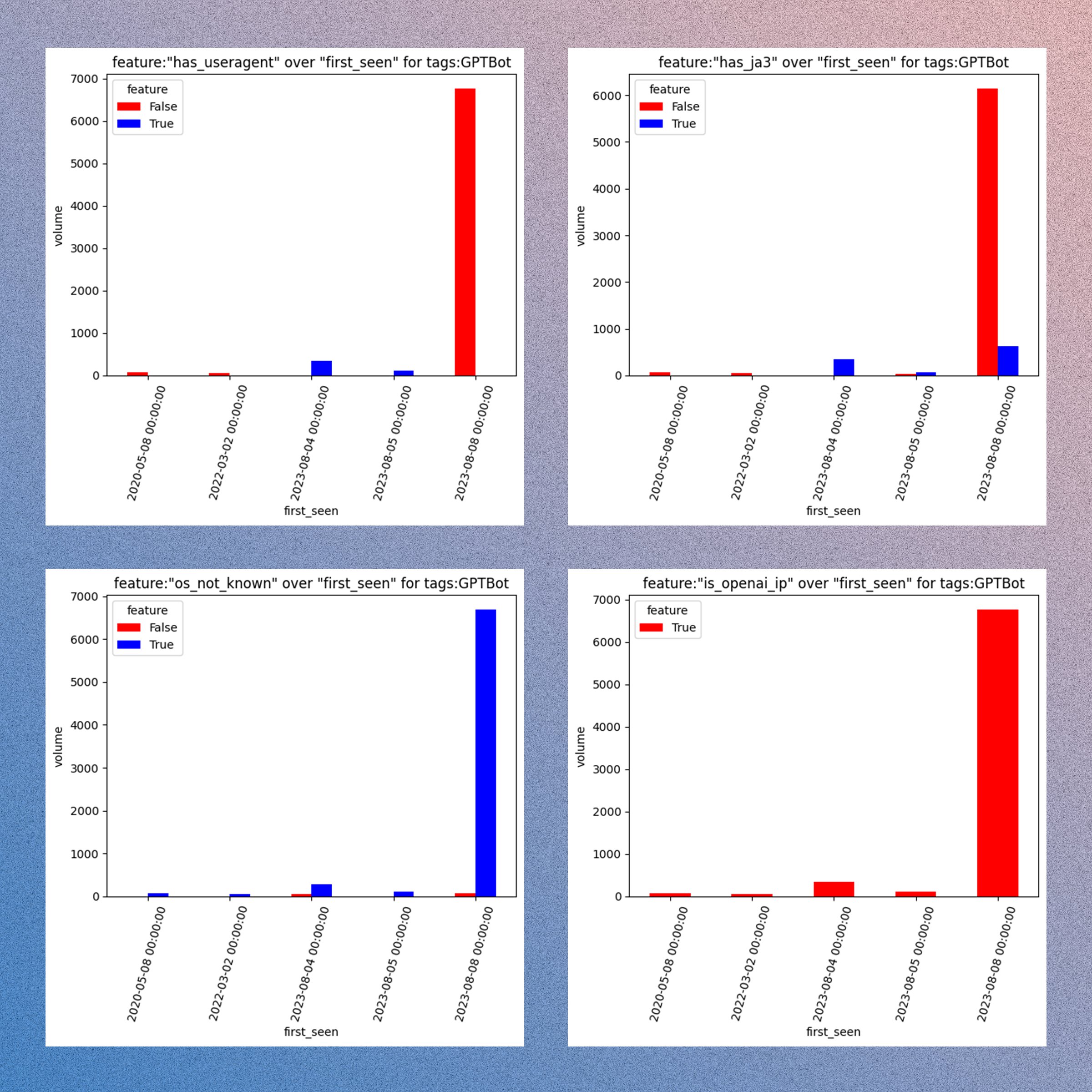

Charts generated when analyzing features of GreyNoise's GPTBot-tagged traffic. [Source]

Caveats

Before I state the conclusions I came to, let me lay down some caveats:

1. This was a small dataset: only about 10k sensor_hits, which isn't much when you consider global network traffic flows. Any results from it should therefore be treated as tentative when applied outside this context.

2. This data exists only because GPTBot happened to crawl a GreyNoise sensor. GreyNoise sensors are like honeypots in that they are designed to attract attention from crawlers and threat actors. An individual sensor can mimic various applications, software, and protocols, including specific versions of them. This is useful when trying to draw the attention of an actor scanning for vulnerable software and hosts. However, one should assume that OpenAI applies a fair amount of targeting when tasking its GPTBot crawlers to collect data. A GPTBot crawler most likely targets a GreyNoise sensor not because the website is associated with GreyNoise (the sensors typically do not self-identify as such), but because the content the sensor is configured to display meets some of OpenAI's collection requirements. It's hard to speculate what these requirements may be, and it's hard to say whether what was analyzed here is "typical" GPTBot traffic. In other words, this subset of traffic only represents what the GreyNoise sensors observed when they were crawled; it is not a continuous or external scan of known GPTBot infrastructure.

EDA conclusions

That said, here are some conclusions I came to when looking at this sample of data:

GreyNoise sensors probably do not typically attract GPTBot; whether they do depends on which application, software, or protocol a sensor is configured to mimic.

As a detection method, relying on GPTBot to self-identify via its user agent is unreliable: not all of the GPTBot-tagged traffic carried the GPTBot user agent.

Although the JA3 fingerprint was consistent whenever one could be captured, most of the observed GPTBot-tagged traffic had no JA3 fingerprint at all.

Traffic tagged as GPTBot by GreyNoise typically did not have an observed operating system.

All traffic tagged by GreyNoise as GPTBot originated from the IP space that OpenAI has publicly disclosed.

Possible detection methods

When present, the top user agent in the traffic tagged by GreyNoise as GPTBot was:

Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)
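A minimal sketch of a user-agent check, written in JavaScript to match the Worker examples later in this post. The function name `isGptBotUserAgent` is hypothetical, and matching the word "GPTBot" case-insensitively is an assumption based on the string above, not a rule published by OpenAI. Because any client can send or omit this header, a match is a hint rather than proof.

```javascript
// Hypothetical helper (not from OpenAI or Cloudflare): returns true when a
// User-Agent header contains the GPTBot product token, for example
// "...; GPTBot/1.0; +https://openai.com/gptbot)". User agents are
// self-reported, so this only catches traffic that chooses to identify itself.
function isGptBotUserAgent(userAgent) {
  // A missing header (null or undefined) cannot be attributed to GPTBot.
  if (typeof userAgent !== "string") {
    return false;
  }
  // Case-insensitive match on "GPTBot" as a whole word.
  return /\bgptbot\b/i.test(userAgent);
}

const observed =
  "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)";

console.log(isGptBotUserAgent(observed)); // true
console.log(isGptBotUserAgent("Mozilla/5.0 (X11; Linux x86_64)")); // false
console.log(isGptBotUserAgent(null)); // false
```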

When present, the top JA3 fingerprint in the traffic tagged by GreyNoise as GPTBot was:

8d9f7747675e24454cd9b7ed35c58707

When present, the top operating system in the traffic tagged by GreyNoise as GPTBot was:

Linux 2.2-3.x

The ASN and AS organization (ASO) for all the traffic tagged by GreyNoise as GPTBot was:

AS8075 Microsoft Corporation

Crafting a filter strategy

To block GPTBot traffic, we first need to detect it reliably. The research above points to three options, which we will probably need to combine to get the best results:

1. Blocking via user agent. This is the most straightforward method, but it assumes the traffic will always self-identify as GPTBot, which, as noted above, is not always the case.

2. Blocking via JA3 fingerprint. This would be a more reliable method if there weren't so many collisions with non-GPTBot traffic; the cipher and extension data seem too common for this to be a high-precision method.

3. Blocking via IP ranges. All of the traffic observed by GreyNoise fell within the IP ranges disclosed by OpenAI. This may be the best method for us, and the total number of addresses is small (126 unique IPv4 addresses).
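The 126 figure appears to correspond to the usable host addresses in the nine /28 blocks: each /28 spans 16 addresses, and excluding the network and broadcast addresses leaves 14 per block, so 9 × 14 = 126. A minimal sketch that expands the ranges this way follows; the helper names are hypothetical, and it assumes every published range is an aligned IPv4 /28.

```javascript
// The nine /28 ranges OpenAI published for GPTBot, copied from the list above.
const GPTBOT_RANGES = [
  "20.15.240.64/28",
  "20.15.240.80/28",
  "20.15.240.96/28",
  "20.15.240.176/28",
  "20.15.241.0/28",
  "20.15.242.128/28",
  "20.15.242.144/28",
  "20.15.242.192/28",
  "40.83.2.64/28",
];

// Dotted-quad IPv4 string -> unsigned integer. Multiplication is used instead
// of bit shifts because JavaScript's bitwise operators are signed 32-bit.
function ipv4ToInt(ip) {
  return ip.split(".").reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

// Unsigned integer -> dotted-quad IPv4 string.
function intToIpv4(n) {
  return [24, 16, 8, 0]
    .map((shift) => Math.floor(n / 2 ** shift) % 256)
    .join(".");
}

// Usable hosts in a CIDR block: every address except the first (network)
// and last (broadcast). Assumes the base address is already the network
// address and the prefix is /30 or shorter.
function usableHosts(cidr) {
  const [base, bits] = cidr.split("/");
  const size = 2 ** (32 - Number(bits));
  const network = ipv4ToInt(base);
  const hosts = [];
  for (let i = 1; i < size - 1; i++) {
    hosts.push(intToIpv4(network + i));
  }
  return hosts;
}

const OPEN_AI_IP_HOSTS = GPTBOT_RANGES.flatMap(usableHosts);

console.log(GPTBOT_RANGES.length * 16); // 144 addresses in total (16 per /28)
console.log(OPEN_AI_IP_HOSTS.length); // 126 usable hosts (14 per /28)
```

The resulting array has the same shape as the `OPEN_AI_IP_HOSTS` list used in the Worker code that follows.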

Filtering out GPTBot traffic with Cloudflare Workers

Cloudflare Workers are serverless functions deployed to Cloudflare's edge network. They can inspect and modify HTTP requests and responses, which means they can also be used to block traffic.

A simple Worker that rejects requests from the disclosed GPTBot IP ranges with a 403 might look like this:

// array of the 126 hosts produced from the disclosed ranges
const OPEN_AI_IP_HOSTS = [
  // ...
];

export default {
  async fetch(request) {
    // get the IP address of the incoming request
    const INCOMING_IP = request.headers.get('cf-connecting-ip');
    // if the IP address is in the disclosed ranges, return a 403
    if (OPEN_AI_IP_HOSTS.includes(INCOMING_IP)) {
      return new Response(null, { status: 403 });
    }
    // otherwise, return a 200 and some text
    return new Response('Rage against the machine!', { status: 200 });
  },
};
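Enumerating individual hosts works, but a range check avoids maintaining a 126-entry list. The sketch below is a hypothetical variant of the Worker above that tests `cf-connecting-ip` against the published CIDR blocks using plain integer arithmetic. The names `GPTBOT_CIDRS`, `parseIpv4`, `inCidr`, `isOpenAiIp`, and `handleRequest` are illustrative, and it handles IPv4 only.

```javascript
// Hypothetical alternative to a hard-coded host list: test the incoming IP
// against the published /28 ranges directly. IPv4 only; anything that does
// not parse as IPv4 (including IPv6 addresses) is treated as a non-match.
const GPTBOT_CIDRS = [
  "20.15.240.64/28",
  "20.15.240.80/28",
  "20.15.240.96/28",
  "20.15.240.176/28",
  "20.15.241.0/28",
  "20.15.242.128/28",
  "20.15.242.144/28",
  "20.15.242.192/28",
  "40.83.2.64/28",
];

// Parse a dotted-quad IPv4 string into an unsigned integer, or return null.
function parseIpv4(ip) {
  const parts = ip.split(".");
  if (parts.length !== 4) return null;
  let value = 0;
  for (const part of parts) {
    if (!/^\d{1,3}$/.test(part) || Number(part) > 255) return null;
    value = value * 256 + Number(part);
  }
  return value;
}

// Two addresses share a CIDR block when integer division by the block size
// puts them in the same bucket.
function inCidr(ip, cidr) {
  const [base, bits] = cidr.split("/");
  const ipValue = parseIpv4(ip);
  const baseValue = parseIpv4(base);
  if (ipValue === null || baseValue === null) return false;
  const blockSize = 2 ** (32 - Number(bits));
  return Math.floor(ipValue / blockSize) === Math.floor(baseValue / blockSize);
}

// True when the header value is an IPv4 address inside any published range.
function isOpenAiIp(ip) {
  return typeof ip === "string" && GPTBOT_CIDRS.some((cidr) => inCidr(ip, cidr));
}

// Mirrors the Worker above. In a module Worker this could be wired up with
// `export default { fetch: handleRequest };`.
async function handleRequest(request) {
  const incomingIp = request.headers.get("cf-connecting-ip");
  if (isOpenAiIp(incomingIp)) {
    return new Response(null, { status: 403 });
  }
  return new Response("Rage against the machine!", { status: 200 });
}
```

One difference from the enumerated list: this matches all 16 addresses in each /28, including the network and broadcast addresses, so it covers 144 addresses rather than 126.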

There are, of course, many other ways to filter traffic with Cloudflare, not least its simple Firewall Rules feature.

Cloudflare Pages is also popular for hosting static sites (like this website!). Pages offers a Workers-like capability called Pages Functions, though it takes a bit of adapting to make a Worker function run in Pages. Pages Functions run before the static site is served, so they are a handy way to build your own software-defined firewall for a static site.

To do this, you would need to create a functions directory in your Pages project, and then create a file called _middleware.js in that directory. The function code for _middleware.js would look something like this:

// array of the 126 hosts produced from the disclosed ranges
const OPEN_AI_IP_HOSTS = [
  // ...
];

export async function onRequest(context) {
  const request = context.request;
  // get the IP address of the incoming request
  const INCOMING_IP = request.headers.get('cf-connecting-ip');
  // if the IP address is in the disclosed ranges, return a 403
  if (OPEN_AI_IP_HOSTS.includes(INCOMING_IP)) {
    return new Response(null, { status: 403 });
  }
  // otherwise, continue to serve the static site
  return await context.next();
}

Say we wanted to block on user agent instead. We could do something similar to the code above, but capture the incoming user agent:

const INCOMING_UA = request.headers.get('user-agent');

From there we would probably look for the string GPTBot in the user agent and, if it's present, return a 403. The same goes for JA3, although the hash is only available to sites using Cloudflare Bot Management:

const INCOMING_JA3 = request.cf.botManagement.ja3Hash;

These methods can even be layered and prioritized, so that if one method fails, the next one is tried.
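One way to layer the checks is sketched below, under a few assumptions: the layer order (IP ranges, then user agent, then JA3) follows the precision trade-offs discussed earlier, the JA3 value is the top fingerprint in the GreyNoise data, and all names are hypothetical. The matching logic takes plain values so it can be exercised outside Cloudflare; `signalsFromRequest` shows where those values would come from in a Worker or Pages Function.

```javascript
// Hypothetical layered check: each layer is a [name, predicate] pair, ordered
// from most to least precise based on the trade-offs discussed above.
const GPTBOT_NETWORKS = new Set([
  "20.15.240.64/28",
  "20.15.240.80/28",
  "20.15.240.96/28",
  "20.15.240.176/28",
  "20.15.241.0/28",
  "20.15.242.128/28",
  "20.15.242.144/28",
  "20.15.242.192/28",
  "40.83.2.64/28",
]);
const GPTBOT_JA3 = "8d9f7747675e24454cd9b7ed35c58707";

// Every published range is a /28, so an IPv4 address falls inside one of them
// exactly when rounding its last octet down to a multiple of 16 produces a
// listed network. (This shortcut only works because all ranges are /28.)
function inGptBotRange(ip) {
  const m = /^(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})$/.exec(ip ?? "");
  if (!m) return false;
  const last = Number(m[4]);
  return GPTBOT_NETWORKS.has(`${m[1]}.${m[2]}.${m[3]}.${last - (last % 16)}/28`);
}

const LAYERS = [
  ["ip", (s) => inGptBotRange(s.ip)],
  ["user-agent", (s) => /\bgptbot\b/i.test(s.userAgent ?? "")],
  ["ja3", (s) => s.ja3 === GPTBOT_JA3],
];

// Returns the name of the first layer that matches, or null if none do.
function matchedLayer(signals) {
  for (const [name, predicate] of LAYERS) {
    if (predicate(signals)) return name;
  }
  return null;
}

// Collect the signals from a Worker/Pages request. request.cf.botManagement
// is only populated when Cloudflare Bot Management is enabled, so ja3 may be
// undefined.
function signalsFromRequest(request) {
  return {
    ip: request.headers.get("cf-connecting-ip"),
    userAgent: request.headers.get("user-agent"),
    ja3: request.cf?.botManagement?.ja3Hash,
  };
}
```

Given the JA3 collisions mentioned earlier, a cautious setup might return a 403 only for the `ip` and `user-agent` layers and merely log `ja3` matches.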

Conclusion

We didn't really get into the "why?" in this post, and that's because it's up to you to decide how your data gets used. I mean hey, why stop at GPTBot? We can use this same method to block traffic from any IP range, user agent, or JA3 fingerprint. We can even use this method to block traffic from any ASN or ASO. We might need to get creative as it seems everyone is collecting all sorts of junk from the web in order to train their large language models!

Let me know if this pointed you in the right direction in your own research.

PostScript: Thanks to the folks at GreyNoise and their support for the research community!